Definition: long-horizon context

The capability of an AI model to analyze huge amounts of information that extend beyond its current "context window," which is essentially the amount of input the model can hold in working memory at one time.
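
To make the idea of a context window concrete, the sketch below treats the window as a fixed number of tokens and shows that anything earlier drops out of view. It assumes a simple "keep the most recent tokens" truncation policy; real systems vary, and the function name `visible_context` is hypothetical.

```python
def visible_context(tokens: list[str], window: int) -> list[str]:
    """Return the tokens a model with a fixed context window can see.

    Assumes a hypothetical keep-the-most-recent-tokens policy: anything
    older than the last `window` tokens falls outside the window.
    """
    if window <= 0:
        return []
    return tokens[-window:]


history = "the meeting moved from Monday to Thursday at noon".split()
print(visible_context(history, 4))  # ['to', 'Thursday', 'at', 'noon']
```

With a window of four tokens, the earlier fact that the meeting was originally on Monday is no longer visible. Long-horizon context techniques aim to keep such distant information usable.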

Training and Inference

Long-horizon context is continually being improved for both training and inference. During training, for example, larger context windows let the model learn dependencies that span a greater amount of data. At the inference stage, improved long-horizon context helps deliver answers faster. See AI training vs. inference.
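
One way to see why larger windows help training: if a long document is cut into fixed-length training sequences, the model can only learn a relationship between two tokens that land in the same sequence. The sketch below assumes simple non-overlapping chunking (real pipelines may overlap or pack sequences differently), and the function names are hypothetical.

```python
def training_windows(num_tokens: int, context_len: int) -> list[range]:
    """Cut a stream of num_tokens tokens into consecutive, non-overlapping
    training sequences of at most context_len tokens each."""
    return [
        range(start, min(start + context_len, num_tokens))
        for start in range(0, num_tokens, context_len)
    ]


def dependency_visible(i: int, j: int, context_len: int) -> bool:
    """True if token positions i and j fall in the same training sequence,
    so the model can learn a relationship between them."""
    return i // context_len == j // context_len


# A 10,000-token document in which token 100 influences token 6,000.
print(len(training_windows(10_000, 4_096)))   # 3 sequences
print(dependency_visible(100, 6_000, 4_096))  # False: split across sequences
print(dependency_visible(100, 6_000, 8_192))  # True: same sequence
```

Doubling the window from 4,096 to 8,192 tokens brings both positions into one sequence. The window sizes are illustrative and not tied to any particular model.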

Context GPUs

Context GPUs are specially designed GPUs that handle large amounts of memory and function as a first processing stage. They are generally optimized for inference, and after they complete their stage, the data are passed on to the GPUs that perform massively parallel computations. See GPU.
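
The split between a context stage and a later computation stage can be sketched as a two-step pipeline. This is a simplified illustration, not NVIDIA's software: it assumes the first stage ingests the whole prompt and hands a cache to the second stage, which then emits tokens one at a time. `ContextCache`, `context_stage`, `generation_stage`, `run_inference` and the echo rule standing in for a real model are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ContextCache:
    """Hypothetical stand-in for the state built from the prompt
    (in transformer models, the attention key/value cache)."""
    tokens: list[str] = field(default_factory=list)


def context_stage(prompt: list[str]) -> ContextCache:
    """Stage 1: ingest the entire prompt in one pass and build the cache.
    In this sketch, 'building' the cache just copies the prompt tokens."""
    if not prompt:
        raise ValueError("prompt must contain at least one token")
    return ContextCache(tokens=list(prompt))


def generation_stage(cache: ContextCache, steps: int) -> list[str]:
    """Stage 2: emit output tokens one at a time, reusing the cache
    handed over from stage 1 and appending each new token to it."""
    output = []
    for step in range(steps):
        # Toy rule standing in for a model forward pass:
        # echo the cached token at position `step`.
        next_token = cache.tokens[step]
        cache.tokens.append(next_token)
        output.append(next_token)
    return output


def run_inference(prompt: list[str], steps: int) -> list[str]:
    cache = context_stage(prompt)          # the role a context GPU would play
    return generation_stage(cache, steps)  # the role an AI GPU would play


print(run_inference(["red", "green", "blue"], 4))
# ['red', 'green', 'blue', 'red']
```

In this sketch the only thing the two stages share is the cache, which is why they could, in principle, run on different processors.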

Context and AI GPUs

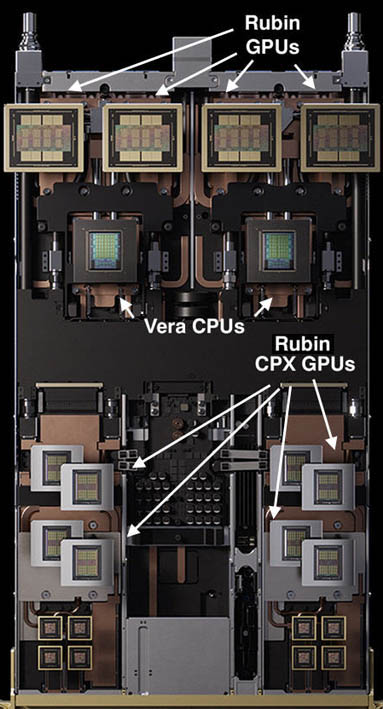

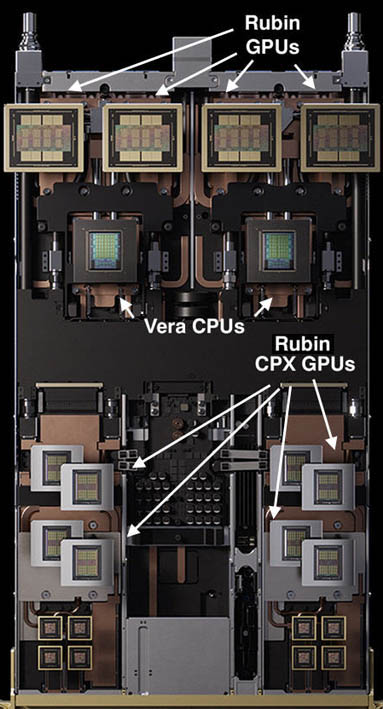

NVIDIA combines its CPX context GPUs with AI GPUs (Rubin GPUs) on this compute tray. Along with switch trays, as many as 18 Vera Rubin trays are installed in one server rack (see inference engine and Vera Rubin). (Image courtesy of NVIDIA.)