Definition: AI chip

(1) For Google's AI chips, see Tensor Processing Unit and Tensor chip.

(2) For AMD's AI chips, see AMD Instinct.

(3) For Amazon's AI chips, see Amazon AI chips.

(4) For NVIDIA's first AI chips, see GPU and CUDA.

(5) For Groq's AI language processing unit (LPU), see Groq.

(6) For the AI image generation chip, see Normal Computing.

(7) For the photonic AI chip, see Native Processing Unit.

(8) For wafer-scale AI chips, see Cerebras AI computer.

(9) For low-power AI chips, see Normal Computing and Native Processing Unit.

(10) The primary AI chip is the graphics processing unit (GPU). The GPU debuted in the late 1990s, as desktop computers became more powerful, to render graphics on screen for animation and video, especially for gamers. Because the GPU performs many operations in parallel, it became the logical processor for AI training and execution (inference), both of which require massive numbers of mathematical calculations performed in parallel. See AI training vs. inference and GPU.
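As a rough illustration of why this kind of math suits parallel hardware, the sketch below computes one small neural-network-style layer (a matrix times a vector) in Python. Each output value is an independent dot product, so the work can be divided among many workers without changing the result. The function names, the tiny weight matrix and the use of a thread pool are all assumptions made for this example. Python threads only stand in for a GPU's thousands of arithmetic units here and do not deliver a GPU-like speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, vec):
    """One output value of the layer: a single dot product."""
    return sum(w * x for w, x in zip(row, vec))


def layer_sequential(weights, vec):
    """Compute every output value one after another."""
    return [dot(row, vec) for row in weights]


def layer_parallel(weights, vec, workers=4):
    """Hand each row to a worker; no row depends on any other row."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in the same order as the input rows
        return list(pool.map(lambda row: dot(row, vec), weights))


# Hypothetical 4x3 weight matrix and 3-element input vector
weights = [[1, 2, 3], [4, 5, 6], [7, 8, 9], [10, 11, 12]]
inputs = [1, 2, 3]

sequential = layer_sequential(weights, inputs)
parallel = layer_parallel(weights, inputs)

print(sequential)              # [14, 32, 50, 68]
print(parallel == sequential)  # True
```

Real AI models repeat this pattern with matrices holding millions or billions of values, which is why hardware that can run many such calculations at once is favored for both training and inference.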

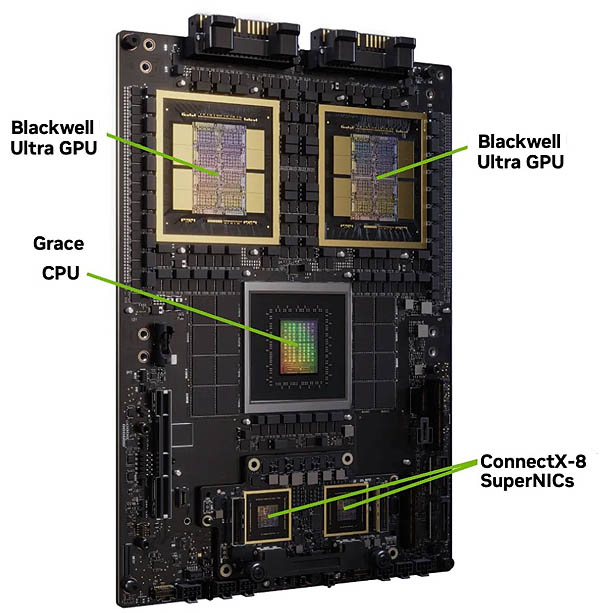

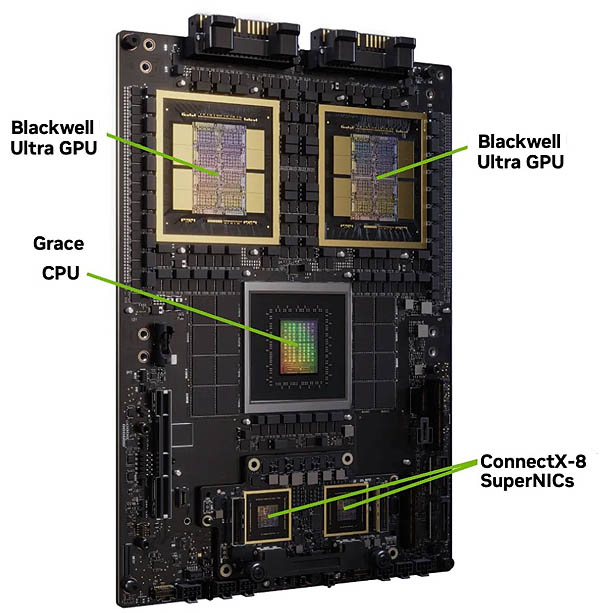

NVIDIA Grace Blackwell Superchip

The Versal System-on-Chip

AI "factories" may have hundreds of liquid-cooled server racks, each holding 36 of these Superchips, which cost tens of thousands of dollars apiece. Everything is connected via NVIDIA's NVLink. (Image courtesy of NVIDIA Corporation.)

Today's chips often include AI processing. This Versal system-on-chip (SoC) contains more than 30 billion transistors and provides circuits for AI processing (green area). It also contains programmable hardware (red area), which means the actual circuits are programmed at startup, a rarity on any SoC. See SoC, FPGA and Versal. (Image courtesy of AMD.)